Dataflow Pipeline

Software: Databricks, MS Excel

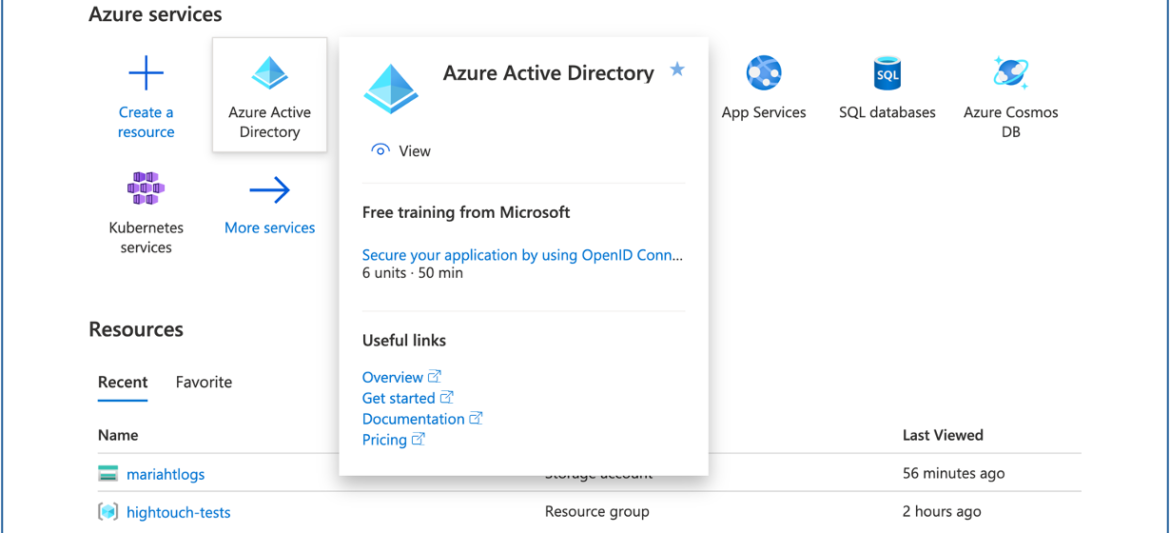

Database: MS Azure, National Reporting System

Programming: Python (PySpark)

Objectives

Automate data ingestion from external databases via a national reporting system.

Streamline data processing for near real-time analytics and reporting.

Leverage an API to aggregate and integrate data.

Implement standardized case definitions to query the database.

Ensure high-quality data transformation for visualization and decision-making.

Provide analytical outputs for integration into a website dashboard.
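
One way to make a standardized case definition reusable is to store it as data (a name plus a set of criteria) and apply the same object to every query. The sketch below is a minimal illustration in plain Python, not the project's actual code: the record fields (lab_result, age), the definition name, and the criteria are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Iterable, List, Tuple

# A surveillance record as a plain dictionary (field names are hypothetical).
Record = Dict[str, Any]
Criterion = Callable[[Record], bool]


@dataclass(frozen=True)
class CaseDefinition:
    """A named set of criteria; a record meets the definition only if every criterion passes."""
    name: str
    criteria: Tuple[Criterion, ...]

    def matches(self, record: Record) -> bool:
        return all(criterion(record) for criterion in self.criteria)


def apply_case_definition(records: Iterable[Record], definition: CaseDefinition) -> List[Record]:
    """Return only the records that meet the case definition."""
    return [record for record in records if definition.matches(record)]


# Hypothetical example definition: fields and thresholds are illustrative only.
EXAMPLE_DEFINITION = CaseDefinition(
    name="example_condition_v1",
    criteria=(
        lambda r: r.get("lab_result") == "positive",
        lambda r: r.get("age") is not None and r["age"] >= 18,
    ),
)
```

Keeping each definition as a single named object means every report uses the same version of the criteria. In a PySpark pipeline the same criteria would more typically be written as column conditions passed to DataFrame.filter.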

Solution

Designed an automated data pipeline to extract, process, and store surveillance data.

Used an API to copy and aggregate database records.

Stored structured data in Azure Data Lake for scalable, secure access.

Implemented Databricks workflows to clean, format, and transform raw data into structured outputs.

Developed an end-to-end automated process, ensuring seamless data flow from ingestion to website visualization.

Created analytical variables (chi-square tests, rates, percent change, and suppression flags) for statistical insight.
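
The analytical variables listed above can be sketched as small functions. This is an illustrative plain-Python version, not the pipeline's code: it assumes rates are expressed per 100,000 population, that the chi-square compares cases versus non-cases between two periods (Pearson, 1 degree of freedom, no continuity correction), and that small counts below a hypothetical threshold of 5 are suppressed. Real suppression rules vary by agency.

```python
from typing import Optional

# Hypothetical small-count threshold; actual suppression rules vary by agency.
SUPPRESSION_THRESHOLD = 5


def rate_per_100k(cases: int, population: int) -> float:
    """Crude rate per 100,000 population."""
    if population <= 0:
        raise ValueError("population must be positive")
    return cases * 100_000 / population


def percent_change(current: float, previous: float) -> Optional[float]:
    """Percent change from the previous period; None when the previous value is 0."""
    if previous == 0:
        return None
    return (current - previous) * 100 / previous


def is_suppressed(count: int, threshold: int = SUPPRESSION_THRESHOLD) -> bool:
    """Flag nonzero counts below the threshold so they are not published."""
    return 0 < count < threshold


def chi_square_two_periods(cases_1: int, pop_1: int, cases_2: int, pop_2: int) -> float:
    """Pearson chi-square for a 2x2 table of cases vs. non-cases in two periods."""
    a, b = cases_1, pop_1 - cases_1
    c, d = cases_2, pop_2 - cases_2
    n = a + b + c + d
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    if denominator == 0:
        raise ValueError("2x2 table has an empty row or column")
    return n * (a * d - b * c) ** 2 / denominator
```

For example, 25 cases in a population of 500,000 gives a rate of 5.0 per 100,000. Whether zero counts are also suppressed is a policy choice; this sketch leaves them visible.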

Benefits

Supports thousands of records, enhancing surveillance and reporting.

Automates data processing, cleaning, and transformation, reducing manual effort.

Provides near real-time insights through automated data aggregation and analysis.

Enhances data accuracy and consistency by applying case definitions and statistical methodologies.
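
The automated cleaning and aggregation step can be illustrated with a short sketch: normalize each raw record, drop malformed rows and duplicates, then count cases per region and ISO week. It is written in plain Python so it runs on its own; in Databricks the same steps would more likely use PySpark's dropDuplicates and groupBy. The field names record_id, region, and report_date and the YYYY-MM-DD date format are assumptions.

```python
from collections import Counter
from datetime import date, datetime
from typing import Dict, Iterable, Optional, Tuple

# Raw rows as delivered by the source system (field names are hypothetical).
RawRecord = Dict[str, str]


def clean_record(raw: RawRecord) -> Optional[Tuple[str, str, date]]:
    """Trim and normalize one row; return None if a required field is missing or malformed."""
    record_id = (raw.get("record_id") or "").strip()
    region = (raw.get("region") or "").strip().title()
    try:
        report_date = datetime.strptime((raw.get("report_date") or "").strip(), "%Y-%m-%d").date()
    except ValueError:
        return None
    if not record_id or not region:
        return None
    return record_id, region, report_date


def weekly_counts(raw_records: Iterable[RawRecord]) -> Dict[Tuple[str, int, int], int]:
    """Deduplicate by record_id and count cases per (region, ISO year, ISO week)."""
    seen = set()
    counts: Counter = Counter()
    for raw in raw_records:
        cleaned = clean_record(raw)
        if cleaned is None:
            continue  # skip rows that fail validation
        record_id, region, report_date = cleaned
        if record_id in seen:
            continue  # keep only the first copy of each record
        seen.add(record_id)
        iso_year, iso_week, _ = report_date.isocalendar()
        counts[(region, iso_year, iso_week)] += 1
    return dict(counts)
```

Keying counts on ISO year and week avoids mixing late-December and early-January reports into the wrong week at a year boundary.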
